A Retrospective on Making a 3.5-Minute AI Animated Short in Two Intensive Days: Pitfalls and Solutions

You can read the Chinese version here: https://waytoagi.feishu.cn/wiki/X2AqwBFMaiqMpfk7kyVcGk9mnWo

Hello, I'm Kiki, an AI product manager with an artistic background and a passion for tinkering with various AI products.

On February 16th, OpenAI dropped its Sora video model into the crowded AI video battleground, sending tremors through the tech world and beyond. As someone working at the intersection of content and AI, I was both excited and shocked, thinking about how many entrepreneurs this move had just outmaneuvered. Sora outperforms its competitors in clip duration, consistency, and logical coherence, leaving them in the dust.

We had assumed that, in 2024, simply mastering basic clip duration and consistency in AI video would count as groundbreaking. Little did we expect AI to advance faster than I can write!

(My overdue New Year write-up is still sitting here, already feeling outdated now that Sora has been released.)

In just over two days, I used AI to produce a nearly four-minute animated short. I generated 1,550 images and used 154 of them, then produced 67 video clips and used 26. In short, what you imagine can't always be drawn, and what gets drawn doesn't always move the way you expect.
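That selection funnel is easy to quantify. The short Python sketch below simply recomputes keep rates from the numbers stated above (154 of 1,550 images and 26 of 67 clips); the variable names and the `keep_rate` helper are illustrative, not part of any tool used in the project.

```python
# Figures reported in this write-up.
images_generated, images_used = 1550, 154
clips_generated, clips_used = 67, 26


def keep_rate(used: int, generated: int) -> float:
    """Fraction of generated assets that made it into the final cut."""
    return used / generated


image_rate = keep_rate(images_used, images_generated)
clip_rate = keep_rate(clips_used, clips_generated)

print(f"images: {image_rate:.1%} kept, ~{1 / image_rate:.1f} generated per image used")
print(f"clips:  {clip_rate:.1%} kept, ~{1 / clip_rate:.1f} generated per clip used")
```

Run as-is, it reports that roughly 9.9% of images and 38.8% of clips made the final cut: about ten images, and between two and three clips, generated for every one used.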

The story is simple. It follows a new student at a Zootopia elementary school: Xiaobailong (Little White Dragon). Because it looks rather different from everyone else, it often causes trouble for itself and those around it without meaning to. Then, on New Year's Eve, something significant happens...

I. Production Process

Faced with the dual pressures of time and money, I found a shortcut that suited me. (AI is a money burner, both for developers and users!)

Simply put, AI took care of the images and clips, but humans had to handle the rest. For now, AI remains a supportive tool.

Here's a brief overview of my workflow:

Efficiency was the top priority for this production; any other approach would have been too costly and time-consuming for me. How did I manage it? I documented all content and materials related to the video in Feishu.

My partner Dayong and I drew on our experience at ByteDance, where he was a director and I was in charge of storyboarding. Thanks to that background, crafting the storyline and script took only minutes; the time-consuming part was generation.

In terms of division of labor, Dayong was responsible for the initial content ideation and scriptwriting; my main tasks were image and video generation and editing.

My Midjourney prompts weren't anything special; they mostly revolved around Pixar, Disney, and 3D styles. For example: "a small white Chinese dragon, anthropomorphic, Smile with surprise, wearing a school uniform, looking at a blurry gift box in the foreground, super close-up shot, camera focus on his face, 3D render, Unreal Engine, Pixar 3D style, blurry classroom scene, bright sunshine --ar 16:9 --niji 6". The core keywords were "a small white Chinese dragon, anthropomorphic, 3D render, Unreal Engine, Pixar 3D style", combined with a 16:9 aspect ratio and the niji 6 model, with other details adjusted as needed. For animation, I mainly used Runway for its Motion Brush feature.

(At the time of my project, Runway's brush could not yet detect areas automatically, so I had to select them by hand.)
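The Midjourney prompt pattern described above (fixed character and style keywords, per-shot action and scene details, then `--ar 16:9 --niji 6`) can be kept consistent across hundreds of generations with a small template. This is a hypothetical Python helper, not something the author used; `build_prompt` and the keyword lists are illustrative assumptions, and only `--ar` and `--niji` are actual Midjourney parameters.

```python
# Hypothetical prompt template: the character and style keywords are reused in
# every prompt, so only the shot-specific parts change between generations.
CHARACTER = ["a small white Chinese dragon", "anthropomorphic"]
STYLE = ["3D render", "Unreal Engine", "Pixar 3D style"]


def build_prompt(action: list[str], scene: list[str],
                 aspect_ratio: str = "16:9", niji_version: int = 6) -> str:
    """Join character, action, style and scene phrases with commas,
    then append Midjourney's --ar and --niji parameters."""
    phrases = CHARACTER + action + STYLE + scene
    return ", ".join(phrases) + f" --ar {aspect_ratio} --niji {niji_version}"


if __name__ == "__main__":
    print(build_prompt(
        action=["Smile with surprise", "wearing a school uniform",
                "looking at a blurry gift box in the foreground",
                "super close-up shot", "camera focus on his face"],
        scene=["blurry classroom scene", "bright sunshine"],
    ))
```

With these arguments it reproduces the example prompt quoted above word for word; for a new shot, only the `action` and `scene` lists change.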

With Runway, results came down largely to luck. Whenever the technology couldn't realize a scene, I had to adapt the story to fit. Sometimes content has to make way for technology: in the end, what AI could generate shaped the storyline, and technical feasibility took priority. I did the final edit in CapCut, skipping heavier software such as After Effects (AE) and Premiere Pro (PR) in favor of speed.

Excluding labor, the membership fees were as follows: Midjourney membership is approximately ¥2,014 per year and Runway about ¥102 per month, which comes to roughly ¥3,240 a year for both, or an average of about ¥270 per month.
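The monthly average follows from a simple calculation. The sketch below just redoes it with the two prices quoted above, assuming the Runway subscription is kept for all 12 months of the year.

```python
# Approximate membership prices quoted in the text (CNY).
MIDJOURNEY_PER_YEAR = 2014
RUNWAY_PER_MONTH = 102

# Assumes Runway is paid for all 12 months.
yearly_total = MIDJOURNEY_PER_YEAR + RUNWAY_PER_MONTH * 12  # 2014 + 1224
monthly_average = yearly_total / 12

print(f"per year:  CNY {yearly_total}")
print(f"per month: CNY {monthly_average:.0f}")
```

This prints a yearly total of 3,238 and a monthly average of about 270.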

II. The Backstory

How I got involved in this project was simple. Every day, I looked forward to the AI tidbits shared by AJ, whom we respectfully call "Teacher" in the "Path to AGI" group. That particular weekend, I had planned to squeeze in some time to study Harvard's computer science fundamentals and improve my beginner-level programming skills, so I couldn't attend the Hangzhou event in person.

One day, I noticed AJ had posted something about an "AI Spring Festival Gala." My excitement was instantaneous. What kind of extraordinary event was this? Such original creativity? Such a rare opportunity? I immediately scanned the QR code to join the group and dragged Dayong along with me.

My reasons for joining the AI Spring Festival Gala were straightforward:

I have a deep interest in AI and believe it can help me realize my creative dreams.

I was attracted by the innovative concept, billed as the first AI Spring Festival Gala co-created by all of humanity.

I was eager to collaborate with others who are equally passionate about AI.

I was confident I could contribute, thanks to my experience in video and animation, not to mention my love for "Zootopia."

I wanted to experience AI technology firsthand and explore its potential and limitations.

Thus, with a spirit of exploration, I embarked on this journey of AI short film creation.

III. Initial Conceptualization

In the group, I met a bunch of energetic peers, especially AJ, Dianzi Jiu (Electronic Wine), and Dianzi Cha (Electronic Tea). Not only were they full of ideas, they could also execute them. Despite busy work schedules and, for some, family commitments, they found time for this project out of pure passion.

After reviewing the overall plan and timeline, Dayong and I began brainstorming the script. We value substantive content over flashy techniques, because it's the story that leaves a lasting impression. That said, good content and good presentation are both indispensable: excellent content attracts and holds the audience's interest, while outstanding presentation amplifies it, making the work more vivid and appealing.

Many videos are incredibly creative and visually stunning, especially now that AI makes previously impossible or costly effects accessible to individuals and small teams. However, most creators still use AI mainly as a tool for showing off. As a content creator, particularly one shaped by Disney and Pixar animation, I wanted to return to the essence of storytelling and let AI serve its purpose as a tool that brings my envisioned content to life.

(There are many more wonderfully storied animated films that I won't enumerate here.)

So we decided we had to craft a complete, good story. Conceiving a compelling, heartwarming short story in a short time is not easy, so I thought of learning from Christmas advertisement shorts, which are known for telling a full story in just a few minutes. At heart, Christmas and the Spring Festival have a lot in common: both revolve around family, warmth, and reunion.

Ultimately, we chose the dragon's unique ability, breathing fire, as the core of our story. This trait is both the dragon's weakness and the narrative's turning point, giving everyone a warm and surprising experience against the backdrop of the Spring Festival. The dragon's accidental fire-breathing sets off a chain of mishaps, but in the end it uses the same ability to solve a problem, lighting fireworks that create a happy, warm atmosphere. Doesn't that fit the festive mood of the Spring Festival perfectly?

IV. The Biggest Challenge on the Content Side

After finalizing the outline, we began writing the script and creating storyboards with Midjourney. However, I quickly ran into a formidable challenge: time. I had been overly optimistic about how much time I could dedicate to this project. As the deadline approached and Electronic Wine, one of our respected mentors, urged us on, I began to doubt whether I could continue. I felt a mix of anger and self-blame. With other important tasks awaiting me the next day, there seemed to be no way to move the project forward without sacrificing sleep.

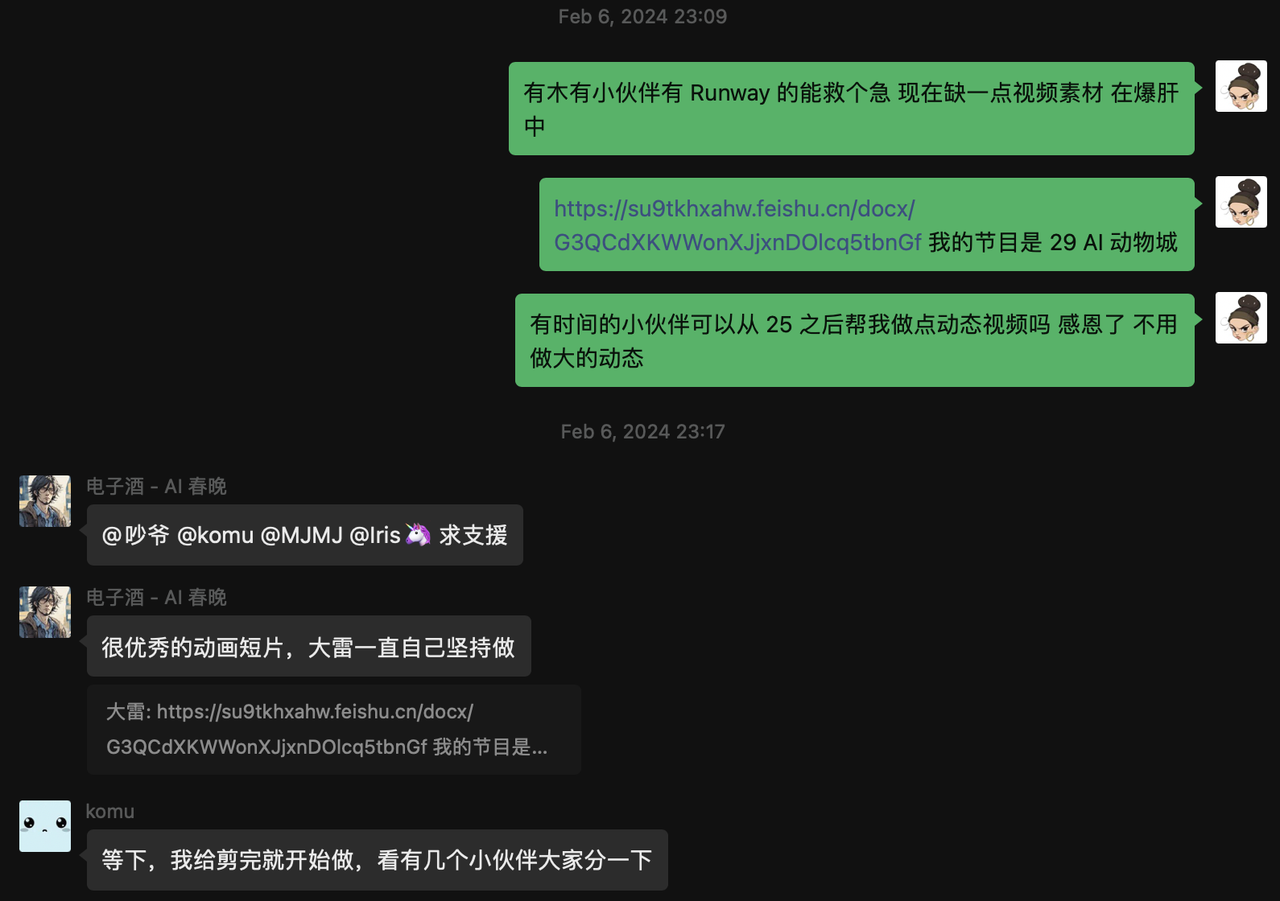

At this critical moment, Electronic Wine offered timely support:

His words opened the floodgates of my emotions and gave my stress an outlet. I confided in him about my predicament and my feelings of despair. Then I asked for help in the AI Spring Festival Gala's main group and shared my Feishu document with editing permissions enabled, hoping to attract anyone with the time, ability, and willingness to assist.

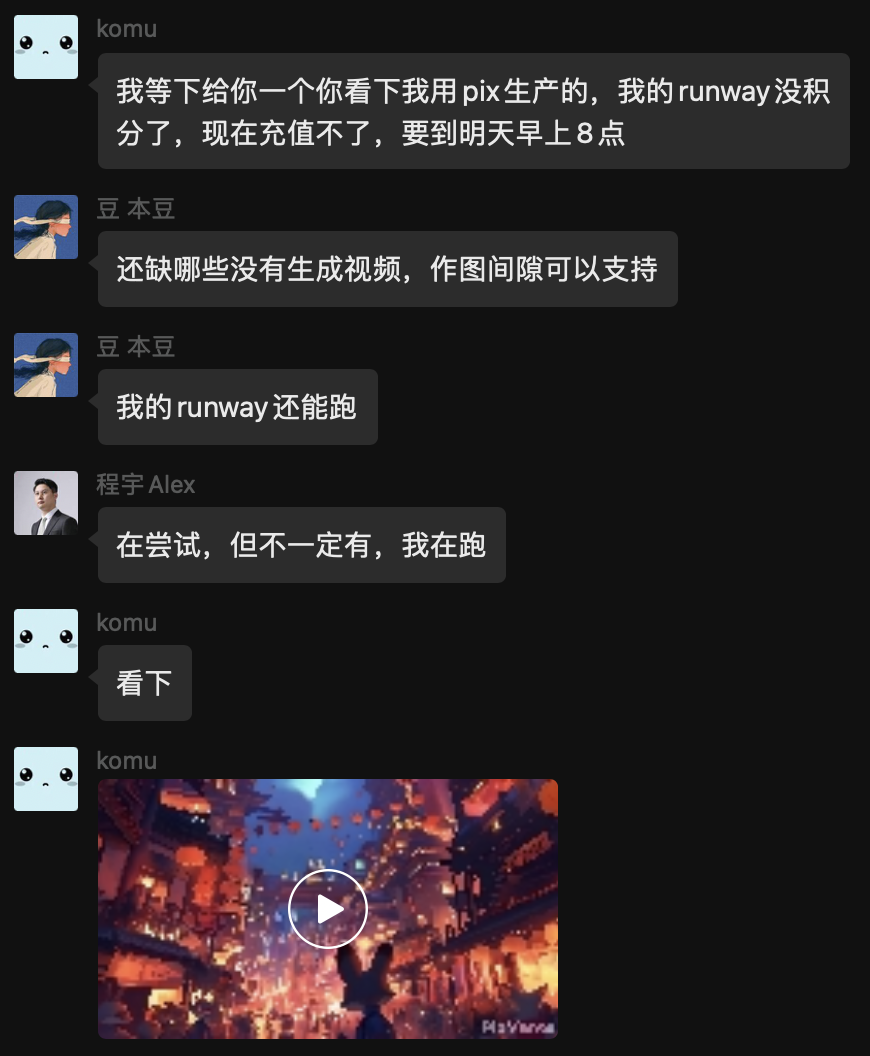

The response to my plea was immediate and overwhelming, far exceeding my expectations. I quickly assigned tasks, and everyone generously spent their own generation credits to support me.

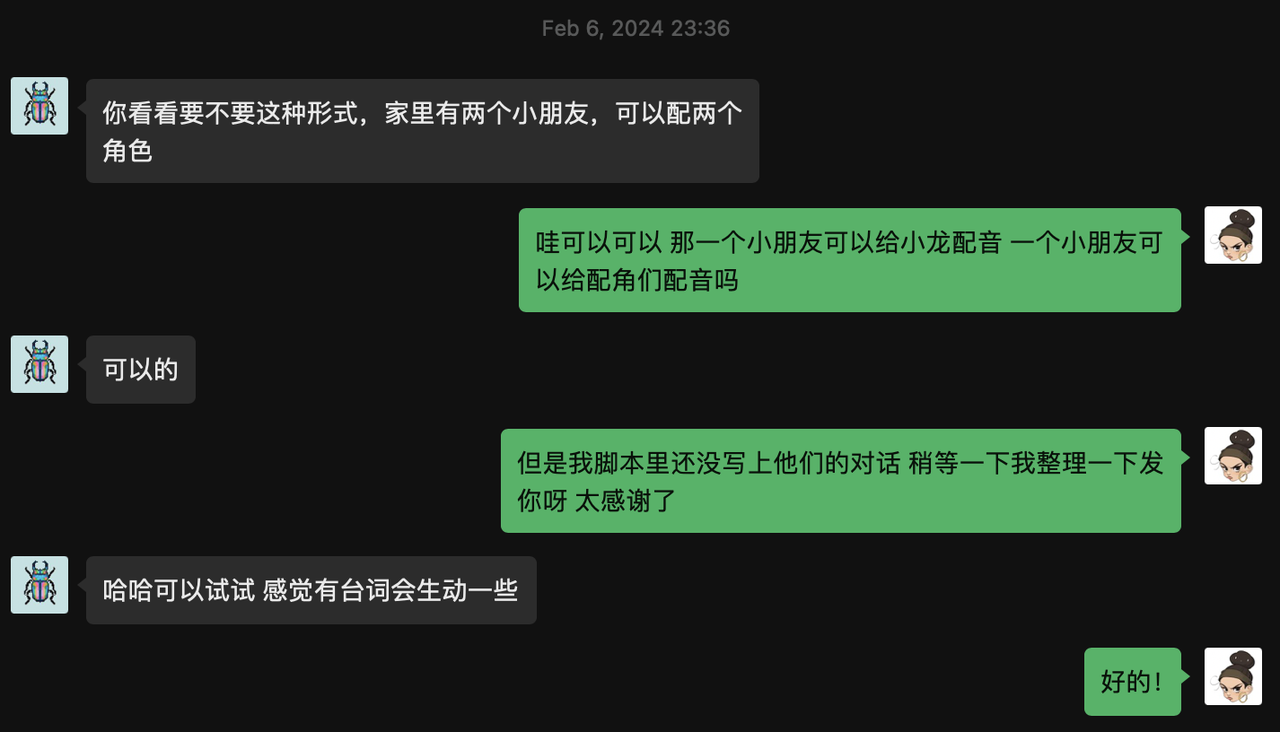

Even AJ, another esteemed mentor, volunteered to have her children try voice acting. Initially, I hadn't considered voiceovers and was aiming for a silent film. But with such goodwill, why not?!

In the end, all voiceovers were performed by AJ and her children, which was absolutely fantastic.

To save everyone's credits and time, I set a few simple guidelines so the creative direction was clear. As I gained experience, I worked faster: with a script in hand and my storyboarding background, I became much more efficient at selecting and creating video segments. Eventually, at 2:24 AM, I finished the first version of the project.

The next day, I found some spare time to keep revising and adding new content to improve the final result. Looking back, without the timely help of AJ, Electronic Wine, and the enthusiastic online community, this project might well have been abandoned.

Ultimately, this work was named the best animation program at the AI Spring Festival Gala, which moved me deeply. The unity and initiative everyone showed are beyond words and perfectly capture the warmth and togetherness of the Spring Festival. Credit for this work goes to every friend who supported and helped:

AJ and the children, Damon, Cheng Yu Alex, AI Yi Ran, Komu, and Xiu Xiu.

Without their selfless contributions, this proud achievement would not exist.

V. Addressing Technical Challenges

After discussing the creative process, I'd like to share my experiences in overcoming technical challenges. AI's greatest advantage lies in significantly improving efficiency and drastically reducing production costs. Although many technical limitations exist, I feel that at least 60% of my vision was realized. Using traditional 3D animation methods, it might have taken me over a decade to learn and produce, from modeling to rendering to editing. AI video generation tools have allowed me to materialize abstract ideas while enhancing the visual quality of the videos.

However, the limitations of technology are also quite apparent:

Maintaining Consistency

Consistency is a major challenge. When creating a narrative video that relies entirely on AI, like my Little White Dragon story, keeping characters and scenes consistent is nearly impossible. With current technology, unless you shoot footage first and then restyle it with AI, purely AI-generated video struggles to reach an ideal level of consistency. This is especially hard for stories about fictional creatures, like mine.

To mitigate these limitations, I adopted several strategies:

First, I tried to keep the key features and silhouettes of characters as consistent as possible. For instance, my protagonist, Little White Dragon, always appeared as the same youthful, Disney-Pixar-style white dragon. I also kept character positioning consistent: Little White Dragon always stood on the right facing left, while other characters mostly stood on the left facing right, making it easier for the audience to tell the characters apart.

Additionally, I reduced the number of characters the audience needed to remember. Apart from the protagonist, Little White Dragon, most of the minor animal characters appeared only once, easing the audience's memory load. For creators who want to make AI videos centered on specific characters, such as a couple, I suggest focusing on those two characters, cutting back on side characters' appearances, and reinforcing the main characters through repeated visual features. Such strategies can partly compensate for AI's lack of consistency and make the narrative smoother and more coherent.
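The two staging habits above (protagonist on the right facing left, side characters mostly on the left facing right and appearing only once) can be checked mechanically against a shot list before spending credits on generation. The author tracked shots in Feishu, not in code, so the `Shot` and `Character` structures and the `check_shots` helper below are hypothetical. They emit warnings rather than errors, because the text describes these as tendencies, not hard rules; "Rabbit" is just an example side character.

```python
from collections import Counter
from dataclasses import dataclass

PROTAGONIST = "Little White Dragon"


@dataclass
class Character:
    name: str
    side: str    # where the character stands in frame: "left" or "right"
    facing: str  # which way the character faces: "left" or "right"


@dataclass
class Shot:
    number: int
    characters: list[Character]


def check_shots(shots: list[Shot]) -> list[str]:
    """Return warnings for shots that break the staging and casting habits."""
    warnings: list[str] = []
    appearances: Counter = Counter()
    for shot in shots:
        for c in shot.characters:
            appearances[c.name] += 1
            if c.name == PROTAGONIST:
                if (c.side, c.facing) != ("right", "left"):
                    warnings.append(f"shot {shot.number}: {c.name} should stand right, facing left")
            elif (c.side, c.facing) != ("left", "right"):
                warnings.append(f"shot {shot.number}: {c.name} usually stands left, facing right")
    # Side characters ideally appear once so viewers have fewer faces to remember.
    for name, count in appearances.items():
        if name != PROTAGONIST and count > 1:
            warnings.append(f"{name} appears in {count} shots; consider cutting repeats")
    return warnings


if __name__ == "__main__":
    shots = [
        Shot(1, [Character(PROTAGONIST, "right", "left"), Character("Rabbit", "left", "right")]),
        Shot(2, [Character(PROTAGONIST, "left", "right")]),
        Shot(3, [Character("Rabbit", "left", "right")]),
    ]
    for warning in check_shots(shots):
        print(warning)
```

In the example, shot 2 is flagged for staging the dragon on the wrong side, and the rabbit is flagged for appearing twice.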

Unpredictable Outputs

Working with AI-generated content is like Forrest Gump with his box of chocolates; you never know what you're going to get next. Take Midjourney, for example. Even with a clear image in my mind, it acts like that friend who's always daydreaming, surprising you with outcomes you never anticipated, despite your detailed instructions. To achieve coherence between the visuals and the story, I found myself constantly rolling the dice, hoping to land on something that barely meets my expectations rather than a complete misfire. (But for efficiency's sake, I had to bite the bullet and go with anything that was remotely acceptable!)
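"Rolling the dice" can be made a little more concrete. If each generated image is independently usable with probability p, the chance of at least one usable image among n images is 1 - (1 - p)^n, so the smallest n that reaches a target probability T is ceil(ln(1 - T) / ln(1 - p)). The sketch below assumes independence and an illustrative p = 0.1 (close to the overall 154-of-1,550 keep rate, although that rate also reflects story-driven selection); the default of four images per job reflects Midjourney's standard four-image grid.

```python
import math


def images_needed(p: float, target: float) -> int:
    """Smallest n such that 1 - (1 - p)**n >= target,
    assuming each image is usable independently with probability p."""
    if not (0 < p < 1 and 0 < target < 1):
        raise ValueError("p and target must both lie strictly between 0 and 1")
    return math.ceil(math.log(1 - target) / math.log(1 - p))


def jobs_needed(p: float, target: float, images_per_job: int = 4) -> int:
    """Number of generation jobs when each job returns images_per_job images."""
    return math.ceil(images_needed(p, target) / images_per_job)


if __name__ == "__main__":
    # Illustrative assumption: 1 in 10 images is usable.
    for target in (0.5, 0.9, 0.99):
        print(target, images_needed(0.1, target), jobs_needed(0.1, target))
```

Under these assumptions, a 90% chance of at least one usable image takes 22 images, or six four-image jobs. The model ignores that rerolls are not truly independent, since prompts get tweaked between attempts.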

Video production was an even wilder ride. Take expression control, for instance. I was aiming for Pixar-level finesse, but what AI gave me looked more like a stone figure melted into a puddle. I expected Little White Dragon to shyly wave in the classroom, but instead, I got a rigid motion that was so off it was comical.

This made me think that perhaps our future commands to AI shouldn't be limited to text. As technology advances, we might be able to use "sketches" or "short clips" to guide AI, allowing it to capture our creativity more accurately. For someone like me, with a background in drawing, wouldn't it be more direct and efficient to communicate with AI through simple sketches?

For video products, we could try letting users employ preset materials with some motion instructions, like having a character walk from one side of the screen to the other, locking its path with keyframes. While this approach might seem a bit Stone Age in terms of animation production, it at least could stabilize AI output and better match our expectations.
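The keyframe idea above corresponds to the linear interpolation that traditional animation tools perform between keys. As a rough sketch of what "locking a path" could mean, the hypothetical `position_at` function below returns a character's (x, y) screen position at time t from a list of (time, x, y) keyframes. It is not an API of Runway or any other product, and a real tool would likely add easing curves.

```python
from bisect import bisect_right

Keyframe = tuple[float, float, float]  # (time in seconds, x, y); x, y as 0..1 of frame


def position_at(keyframes: list[Keyframe], t: float) -> tuple[float, float]:
    """Linearly interpolate a character's (x, y) position at time t.

    keyframes must be sorted by strictly increasing time.
    Before the first key or after the last, the position is held.
    """
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1], keyframes[0][2]
    if t >= times[-1]:
        return keyframes[-1][1], keyframes[-1][2]
    i = bisect_right(times, t)  # times[i - 1] <= t < times[i]
    t0, x0, y0 = keyframes[i - 1]
    t1, x1, y1 = keyframes[i]
    u = (t - t0) / (t1 - t0)  # progress between the two keys, 0..1
    return x0 + u * (x1 - x0), y0 + u * (y1 - y0)


if __name__ == "__main__":
    # A character walks from the left side to the right side over 4 seconds.
    walk = [(0.0, 0.1, 0.7), (4.0, 0.9, 0.7)]
    for t in (0.0, 1.0, 2.0, 4.0):
        print(t, position_at(walk, t))
```

Because the path is fully determined by the keys, the character can only drift as far as the interpolation allows, which is the stability the paragraph above is after.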

Limitations on Character Numbers and Movement Range

I've found that when generating with Midjourney, it's best not to include too many characters; "too many" may mean more than two. Humans or animals are manageable, but getting anthropomorphic characters like my Little White Dragon right feels about as likely as winning the lottery. For instance, a simple scene in which Little Dragon breathes fire on a gift box held by a little rabbit was an ordeal for MJ.

(The results were beyond comment.)

Either the animals weren't anthropomorphic, or the dragon was massively oversized. Of course, I understand that data samples are scarce, especially for Chinese dragons, so getting a satisfactory image of Little White Dragon out of MJ was a real challenge. As for video generation, larger movements such as turning the head, shedding tears, raising a hand, or more dynamic facial expressions still stretch current technology. They will require more advanced technology, richer data, and greater computing power.

My strategy was to avoid shots that require extensive action. When that was unavoidable, I created scenes with only minor movements and then boosted expressiveness in other ways, such as text and sound, as much as possible. Although this doesn't fully make up for the missing larger actions, it at least makes the visuals and narrative less jarring.

Supplementing with Text

By adding dialogue and scene descriptions, we can effectively compensate for details and depth that AI-generated images fail to achieve. This method helps the audience better understand the background of the scene and the psychology of the characters, making up for visual shortcomings.

Supplementing with Sound

Sound is another element that can greatly enhance a video's atmosphere and immersion. I enriched scenes with appropriate sound effects, such as city ambiance, voices and car horns on the streets, school bells and children's chatter, and cicadas chirping outdoors. These details fill in where the visuals fall short on expressiveness, making the video more vivid and realistic.

Music selection is also crucial for enhancing the overall effect of the video. Fortunately, CapCut offers a rich music library, allowing me to find background music that fits perfectly with my video content. The harmony between the video's rhythm and the music is an aspect that cannot be overlooked. Given the relatively simple structure of my story, I focused on coordinating the visuals with the rhythm of the music to improve the viewing experience.
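Coordinating visuals with the music's rhythm often comes down to placing cuts on beats. Assuming a track with a constant, known tempo (BPM) and a first beat at `offset` seconds, the hypothetical helpers below list the beat times and snap rough cut points to the nearest beat. This is only a sketch of the idea; it does not describe how CapCut's own features work, and real tracks may drift or change tempo.

```python
def beat_times(bpm: float, duration: float, offset: float = 0.0) -> list[float]:
    """Beat timestamps (seconds) from offset up to duration at a constant tempo."""
    step = 60.0 / bpm
    count = int((duration - offset) / step) + 1
    # Multiply instead of accumulating to avoid floating-point drift.
    return [offset + k * step for k in range(count)]


def snap_cuts(cuts: list[float], beats: list[float]) -> list[float]:
    """Move each rough cut point to the nearest beat (earlier beat wins ties)."""
    return [min(beats, key=lambda b: abs(b - cut)) for cut in cuts]


if __name__ == "__main__":
    beats = beat_times(bpm=120, duration=10.0)  # one beat every 0.5 s
    print(snap_cuts([1.2, 3.9, 7.74], beats))
```

At 120 BPM, rough cuts at 1.2 s, 3.9 s, and 7.74 s land on the beats at 1.0 s, 4.0 s, and 7.5 s.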

In summary, by skillfully combining textual descriptions and sound elements, we can effectively supplement and enhance the expressiveness of AI-generated content, offering audiences a richer and more immersive experience visually and auditorily.

Conclusion

In this short-term experiment, the short film I produced was more like a dynamic picture book. Many of the problems and challenges encountered during the production process were gradually discovered through continuous creation, examination, and reflection, which I couldn't have anticipated from the start. By sharing my experience, I hope to provide some references and guidance for those dreaming of creating their own short or feature-length works with AI technology, helping you avoid potential pitfalls and save time exploring solutions.

I firmly believe that future AI video technology will open up broader space for imagination and innovation. Beyond Runway, there are tools such as Stable Diffusion, Stable Video, DomoAI, PixVerse, and Pika, plus the not-yet-public Sora, each with its own strengths, serving different needs and audiences and offering a variety of creative possibilities. However, which software you choose is not the most crucial part. The truly suitable tools are those that let us express our creativity smoothly and realize what we envision. Whether through complex or traditional methods, such as 2D hand-drawn animation, stop motion, or even live-action film, any tool or method that faithfully presents our stories is commendable.

In content creation, quality content is always at the core. Once Sora and other more advanced AI video tools arrive, I look forward to remaking this short film with more mature technology.

Thank you for reading this far and witnessing this journey of exploration and creation together.